It was dull, familiar in the 1990s, with law enforcement in movies and television making a pixelated, blurry image and pressing the magic “enhance” button to reveal suspects facing justice. Creating data where it simply didn’t exist before was a great way to break the immersion for anyone with little technical experience and ruin a lot of movies and TV shows.

Of course, technology is advancing, and what was once a complete impossibility often becomes trivial in time. Nowadays, a computer under $ 100 is expected to be able to easily distinguish between a banana, a dog and a human, something that was unattainable at the dawn of the microcomputer era. This ability is rooted in neural network technology, which can be trained to perform any task that was previously considered difficult for computers.

With neural networks and a lot of processing power, there are streams of projects aimed at “improving” everything from low-resolution human faces to old movie footage, increasing the resolution and filling in data that simply doesn’t exist. But what’s really going on behind the scenes, and can this technology really improve anything?

An assumption is formed

We’ve imagined neural networks to do such feats before, such as the DAIN algorithm, which increases the frames to 60 frames per second. Others, such as [Denis Shiryaev], combine different tools for coloring old frames, smoothing frame rates and prestigious resolutions up to 4K. Neural networks can do all this and more, and basically, the method is the same at the basic level. For example, to create a neural network to increase frames to 4K resolution, you must first be trained. The web learns from pairs of low-resolution photo images and the corresponding high-resolution original. It then tries to find transformation parameters that take the low-resolution data and give a result corresponding as closely as possible to the high-resolution original. Once properly trained for a large enough number of images, the neural network can be used to apply similar transformations to other materials. The process is similar to increasing the frame rate and even coloring. Display online color content, and then display the black-and-white version. With enough training, he can develop algorithms for applying probable colors to other black-and-white frames.

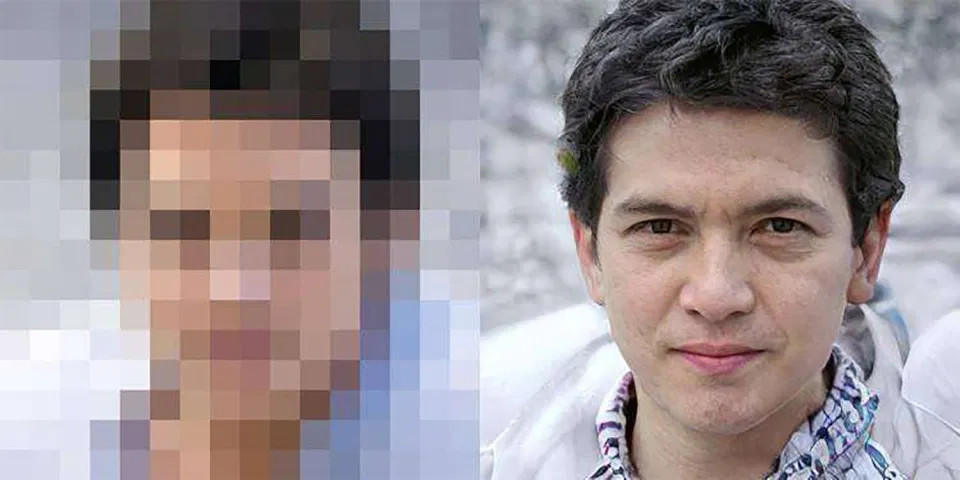

The important thing to note about this technology is that it just uses a rich experience base to produce what it thoughts appropriate. It’s no different than watching a movie and guessing at the end, having seen many similar tropes in other movies before. There is a high probability that the assumption is in the ball, but it does not guarantee that it is 100% true. This is a common thread when using AI to zoom in, as explained by the team behind the PULSE facial imaging tool. The PULSE algorithm synthesizes an image based on a very low resolution input of a human face. The algorithm makes the best guess about what the original faces might look like based on the data from its training set, checking its performance by zooming in to see if the result matches the original low-resolution input. There is no guarantee that the generated person has any real resemblance to the real one, of course. The high resolution output is just a computer idea for a realistic human face could are the source of the low-resolution image. The technique is even applied to textures of video games, but the results can be mixed. The neural network does not always make the right assumption, and often a person who is in a cycle needs to refine the outcome for best results. Sometimes the results are fun, However.

It remains a universal truth that when working with low-resolution images or black-and-white frames, it is not possible to fill in data that is not there. It happens that with the help of neural networks we can make excellent assumptions that may seem real to a casual observer. The limitations of this technology appear more often than you might think. For example, coloring can be very effective for things like city streets and trees, but it performs very poorly for others, such as clothing. The leaves are usually green, while the roads are usually gray. However, the hat can be any color; while a rough image of a shadow can be obtained from a black-and-white image, the exact hue is lost forever. In these cases, neural networks can only strike in the dark.

For these reasons, it is important not to consider shots “enhanced” in this way as historically significant. Nothing generated by such an algorithm can be conclusively grounded in truth. Take, for example, a colored film at a political event. The algorithm can change fine details, such as the color of the lapel or banner, creating a suggestion of attachment without a factual basis. Zoom algorithms can create individuals with incredible resemblance to historical figures who may never have been present at all. In this way, archivists and those working on the restoration of old footage avoid such tools as anathema to their cause of maintaining an accurate record of history.

True perceived quality is also a problem. Comparison of the 4K extended film from Paris in 1890 just fades compared to footage taken with a real 1080p camera in New York in 1993.. Even the best assumption of a powerful neural network struggles to be measured with high-quality raw data. Of course, one has to report an improvement in camera technology worth over 100 years, but nonetheless, neural networks will not replace quality camera equipment any time soon. There is simply no substitute for capturing high quality good data.

Conclusion

There are applications for “improvement” algorithms; we can imagine Hollywood’s interest in resizing old footage for use in old works. However, the use of such techniques for purposes such as historical analysis or law enforcement purposes is simply out of the question. Computer-generated data simply has no real connection to reality and therefore cannot be used in such truth-seeking fields. However, this will not necessarily stop someone from trying. Thus, understanding the basic concepts of how these tools work is key for anyone who wants to see through smoke and mirrors.